ICPC

The International Collegiate Programming Contest (ICPC) is the largest and most prestigious competitive programming championship in the world. The contest has been held annually since 1977. Teams reach the finals through a multi-stage selection: first at their own university, then at the regional stages.

ICPC participants include such well-known universities as Stanford University, Harvard University, the California Institute of Technology, the Massachusetts Institute of Technology, Saint Petersburg State University, Lomonosov Moscow State University, the University of Warsaw, the University of Waterloo, and many others.

Each year ICPC attracts an impressive number of participants, exceeding even the number of athletes who compete in the Olympic Games. For example, in 2017, 46,381 people from 103 countries took part in ICPC, while 11,544 athletes competed across all stages of the Summer Olympic Games in Rio de Janeiro.

In the 2023-2024 season, the Northern Eurasia Finals will be held simultaneously on December 12 and 13!

Event Program:

🗓️ Schedule: to be announced later

Tuesday, December 12, 2023

TBA | Registration | Lobby

TBA | Opening Ceremony | Main Hall

TBA | Practice Session | Main Hall

Wednesday, December 13, 2023

TBA | Northern Eurasia Finals | Main Hall

TBA | Awards Ceremony for Northern Eurasia Finals | Main Hall

TBA | Celebration | Main Hall

Rules

ICPC competitions give talented students a unique opportunity to interact with one another and to demonstrate their skills in teamwork, programming, and devising methods for solving complex problems. ICPC is a large-scale platform that brings together academia, industry, and the community in order to draw attention to and inspire the next generation of computing professionals who strive for excellence.

Organizers and Partners

Accommodation Recommendations

Here you will find information on recommended hotels and official accommodation partners, as well as tips on choosing and booking lodging. The section also includes recommendations on transport accessibility and proximity to the competition venue, so that participants' stay is as comfortable and convenient as possible.

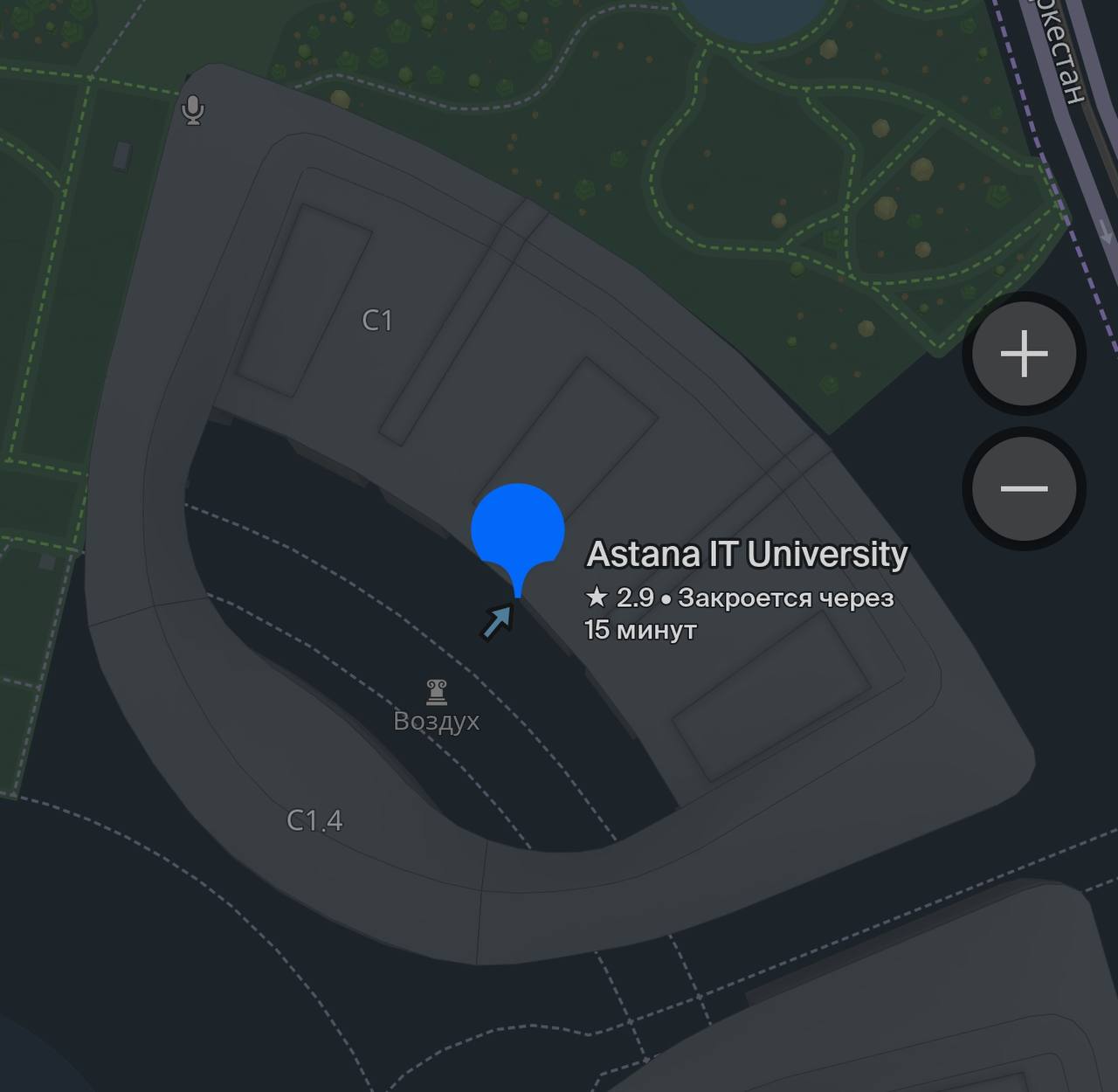

Venue

In Astana, all events will take place at Astana IT University, located at Mangilik El Avenue (проспект Мәңгілік Ел), 55/11

EXPO Business Center, Block C1

Astana, Kazakhstan, 010000


JamesSnono 30 августа, 2025

монолитный фундамент для влажного климата Сочи Применяйте монолитный фундамент для влажного климата Сочи и получите надежность.

Vivodzapojkrasnoyarsklic 30 августа, 2025

Лечение алкоголизма анонимно становится все более популярным, так как всё больше людей стремятся восстановиться от алкогольной зависимости в условиях конфиденциальности. На сайте vivod-iz-zapoya-krasnoyarsk012.ru доступны разные программы реабилитации, включая detox-программы, которые позволяют пройти лечение в комфортной обстановке. Медицинская помощь при алкоголизме включает кодирование от алкоголя и психотерапию зависимостей. Анонимные сообщества поддержки помогают справиться с психологическими трудностями, а опора близких играет ключевую роль в процессе восстановления от алкогольной зависимости. Консультации по алкоголизму онлайн и консультации без раскрытия данных обеспечивают доступ к психологической поддержке при запойном состоянии. Анонимное лечение дает возможность людям быть уверенными в конфиденциальности. Важно помнить, что начало пути к выздоровлению, это поиск помощи.

JamesSnono 30 августа, 2025

http://stroyexpertsochi.ru/ Просмотрите раздел услуг и узнайте о зелёных домах, экодомах и применении солнечных панелей.

Narkologiyatulalic 30 августа, 2025

После запоя важно провести детоксикацию организма, чтобы минимизировать последствия алкоголизма. Нарколог на дом без посещения клиники предоставляет услуги по лечению запоя, в т.ч. различные методы детоксикации. Среди признаков запоя наблюдаются раздражительность и наличие физической зависимости. Помощь со стороны семьи и советы специалистов важны для процесса восстановления. Реабилитация после запойного состояния охватывает как психологическую помощь, так и восстановление здоровья. Лечение на дому позволяет обеспечить комфортную обстановку и сохранить конфиденциальность. Не стесняйтесь обращаться к наркологам для анонимного лечения и получения эффективной помощи.

JamesSnono 31 августа, 2025

https://stroyexpertsochi.ru/ Узнайте на наш сайт и получите ценные советы экспертов по безопасному дому для семьи.

Narkologiyatulalic 31 августа, 2025

Стоимость вывода из запоя от алкоголя в Туле варьируется в связи с различными аспектами. Нарколог на дом анонимно предоставляет услуги по выведению из запоя и медицинской помощи при алкоголизме. Обращение к нарколога поможет определению оптимальной программы детокса. Цены на лечение алкоголизма могут содержать стоимость анонимную детоксикацию и реабилитацию от алкоголя. Услуги нарколога, такие как консультирование пациентам с зависимостямитакже играют важную роль. В наркологической клинике Тула доступны различные программыкоторые могут влиять на общую стоимость. Важно помнить о профилактике алкогольной зависимости и своевременной помощи.

JamesSnono 31 августа, 2025

stroyexpertsochi.ru/ Ознакомьтесь с портфолио объектов и получите практические советы по проектированию и благоустройству территории.

JamesSnono 31 августа, 2025

https://stroyexpertsochi.ru/ Узнайте на наш сайт и получите рекомендации по строительству и ремонту домов в Сочи.

Vivodzapojsmolensklic 31 августа, 2025

вывод из запоя смоленск vivod-iz-zapoya-smolensk013.ru экстренный вывод из запоя

JamesSnono 31 августа, 2025

stroyexpertsochi.ru Откройте наши материалы и узнайте о способах энергоэффективного обустройства коттеджей и частных домов.

Vivodtulalic 31 августа, 2025

Когда вам или вашим близким нужна помощь при алкоголизме‚ знайте‚ как позвать наркологу на дом в Туле. Вызов специалиста нарколога на дом позволяет получить экстренную помощь без необходимости в посещении клиники. Это особенно актуально при лечении запоя‚ когда человек нужен в медицинской помощи немедленно. врач нарколог на дом Начальным этапом будет находка услуг нарколога. Есть возможность обратиться в специализированные клиники или воспользоваться онлайн-сервисами для вызова нарколога. Консультация нарколога услужит оценить состояние пациента и выявить необходимое лечение зависимости.При вызове врача необходимо сообщить о симптомах запоя‚ чтобы нарколог мог адекватно подготовиться к оказанию помощи. Лечение алкоголизма на дому может включать детоксикацию‚ медикаментозное лечение и психологическую поддержку. Анонимная помощь нарколога также доступна‚ что делает процесс менее стрессовым для пациента.В Туле есть множество адреса наркологических клиник‚ где можно получить дополнительные услуги и реабилитацию от алкоголя. Не забывайте‚ что медицинская помощь на дому может стать первым шагом к восстановлению и улучшению качества жизни. Если вам необходима экстренная помощь нарколога‚ не откладывайте – здоровье это главное!}

Zapojtulalic 31 августа, 2025

Услуги наркологов в Туле становится все более востребованной‚ особенно когда речь идет о борьбе с алкоголизмом. Нарколог на дом круглосуточно Тула предлагает услуги для людей‚ нуждающихся в экстренной помощи. В рамках специализированной клиники проводится очищение организма от наркотиков и алкоголя‚ что является первым шагом к выздоровлению. Процесс лечения алкоголизма включает в себя облегчение абстиненции‚ что позволяет облегчению состояния пациента. Круглосуточная помощь нарколога обеспечит необходимую медицинскую помощь при алкоголизме в любое время суток. Визит нарколога поможет составить индивидуальную программу лечения алкоголизма, учитывающую все нюансы пациента. Реабилитация зависимых включает психотерапию для зависимых‚ что помогает улучшить психологического состояния и привыканию к жизни без алкоголя. Поддержка близких играет важную роль в процессе лечения; Анонимное лечение зависимости позволит пациенту обращаться за помощью без страха осуждения. Вызывая нарколога на дом‚ вы делаете первый шаг к новой жизни.

JamesSnono 31 августа, 2025

stroyexpertsochi.ru Просмотрите наши материалы и узнайте о современных способах проектирования, отделки и благоустройства домов.

JamesSnono 31 августа, 2025

http://www.stroyexpertsochi.ru Проверяйте наши проекты и ознакомьтесь с современными методами благоустройства участка и геопластики.

Narkologiyasmolensklic 31 августа, 2025

вывод из запоя смоленск vivod-iz-zapoya-smolensk014.ru лечение запоя смоленск

Vivodtulalic 31 августа, 2025

Лечение запоя с помощью капельниц – это необходимая медицинская процедура, которая может содействовать при алкогольной зависимости. Вызвать нарколога на дом в Туле рекомендуется, если выявлены симптомы запоя: сильные головные боли, тошнота, тремор. Медицинская помощь при алкоголизме включает капельницы, способствующие нормализации водно-солевого баланса и обезвреживание токсинов. Вызов врача на дом позволяет получить квалифицированную помощь алкоголикам в комфортной обстановке. Наркологические услуги в Туле включают помощь при запойном состоянии и восстановление после запоя. Чтобы восстановление прошло успешно, важно соблюдать указания медицинских специалистов и пройти курс лечения алкогольной зависимости. Используйте возможности домашней наркологии для скорейшего и качественного выздоровления.

JamesSnono 31 августа, 2025

stroyexpertsochi.ru/ Погрузитесь в портфолио объектов и получите советы по благоустройству участка с уклоном и геопластике.

Narkologiyatulalic 31 августа, 2025

Экстренная наркологическая помощь в Туле: капельница для избавления от запоя Алкогольная зависимость, серьезная проблема, требующая профессионального вмешательства. Нарколог на дом предоставляет услуги, такие как капельница от запоя, способствующая восстановлению организма после продолжительного употребления алкоголя. К основным симптомам алкогольной зависимости относятся: дрожь, повышенная потливость и чувство тревоги. Медицинская помощь при запое включает дезинтоксикацию организма и восстановление. Консультация нарколога поможет определить подходящий план лечения алкоголизма. Неотложная помощь при алкоголизме, ориентированная на вывод из запоя, может избежать тяжелых осложнений. Профилактика запойных состояний и психотерапевтические методы для зависимых — ключевые элементы успешного лечения зависимостей, обеспечивающие устойчивые результаты. Услуги нарколога в Туле доступны круглосуточно, что позволяет получить необходимую помощь в любое время. Не затягивайте с лечением, свяжитесь со специалистами прямо сейчас!

JamesSnono 31 августа, 2025

stroyexpertsochi.ru Откройте наши материалы и получите максимум информации о современных подходах к строительству и благоустройству.

JamesSnono 31 августа, 2025

www.stroyexpertsochi.ru Ознакомьтесь с наш сайт и узнайте о подходах к экологическому и зелёному строительству.

Vivodsmolensklic 31 августа, 2025

вывод из запоя vivod-iz-zapoya-smolensk015.ru вывод из запоя

Domprestarelihmsklic 31 августа, 2025

частный пансионат для пожилых людей pansionat-msk007.ru пансионат с деменцией для пожилых в москве

JamesSnono 31 августа, 2025

stroyexpertsochi.ru/ Откройте портфолио объектов и ознакомьтесь с эффективными дренажными системами и укреплением склона.

JamesSnono 31 августа, 2025

https://www.stroyexpertsochi.ru/ Откройте раздел "Отзывы клиентов" и узнайте о теплоизоляции, энергоэффективных окнах и дверях.

Zapojtulalic 31 августа, 2025

Когда вы или ваш близкий столкнулись с проблемой алкогольной зависимости, капельница от запоя может стать первым шагом к излечению. В Туле профессиональные услуги по детоксикации организма предлагают многочисленные клиники для людей с зависимостью от алкоголя. Врач-нарколог в Туле осуществит диагностику и назначит медикаментозное лечение алкогольной зависимости. Капельницы для снятия похмелья способствуют быстрому улучшению состояния пациента. Процесс восстановления после алкогольной зависимости включает в себя курс реабилитации от алкоголя и поддержку специалистов. Получите медицинские услуги на сайте narkolog-tula020.ru для получения профессиональной помощи.

JamesSnono 31 августа, 2025

узнать больше Ознакомьтесь с полезной информацией и обеспечьте долговечность вашего дома.

Pansimsklic 31 августа, 2025

пансионат для пожилых pansionat-msk008.ru пансионат для престарелых людей

JamesSnono 31 августа, 2025

строительство дома с учётом землетрясений Сочи Выбирайте строительство с учётом землетрясений и обеспечьте устойчивость дома.

JamesSnono 31 августа, 2025

подробнее Ознакомьтесь подробнее и расширьте знания.

Narkologiyatulalic 31 августа, 2025

Инфузионная терапия на дому — это удобное и эффективное лечение, которое даёт возможность пациентам проходить инфузионную терапию в комфортной обстановке домашней обстановке. Профессиональные медсестры обеспечивают профессиональное введение внутривенных инъекций, что способствует восстановлению после болезни и укреплению здоровья на дому. narkolog-tula021.ru Уход на дому включает в себя не лишь саму процедуру, но и мониторинг здоровья пациента. Это особенно важно для людей с хроническими недугами или тем, кто нуждается в профилактике заболеваний. При организации лечения на дому можно доверять в высоком качестве медицинских услуг, что значительно упрощает процесс восстановления.
